GridCop: Monitoring Remotely Executing Programs for Correctness and Progress

Computational workloads for many academic groups, small businesses and consumers are bursty. That is, they are characterized by long periods of little or no processing punctuated by periods of intense computation and insufficient computational resources. By aggregating large numbers of computers and users, the resource demands are "smoothed out" across sub-groups even as demand remains bursty within sub-groups. Centrally managed software projects (e.g. Distributed.net, GENOME, NILE, SETI@Home) have sprung up to access idle machines to perform computations that would be economically infeasible to solve on committed hardware. Centrally managed systems like Condor and LoadLeveler have been developed to allow resources to be aggregated within permanent or ad-hoc organizations.

Centralized administration of resources exists because it allows a trusted entity (system administrators) to verify and track the trustworthiness of users given access to the resources, and it allows users to deal with a known, trusted entity. Certification of the user of a machine is almost always contingent on being an employee of the machine owner, or on being certified by another organization to which the user belongs and which is, in turn, trusted by the machine owner. This certification requires legal contracts that carefully delineate risks and responsibilities, staff to maintain accounts, and accountants to monitor funding streams and tax consequences, and it generally increases the overhead and real cost of acquiring and using computational resources. This in turn restricts the domain of applications that can be run on shared resources. Elimination of these overheads would allow automatic intermediation between consumers and providers of resources, allowing shared resources to blend seamlessly with locally owned resources.

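The promise of increasing available processing power during peak periods by trading cycles during idle periods, without significant additional cost, would motivate people to join a cycle-sharing system, but only one in which several significant technical challenges have been solved. As discussed in turn below, these include resource discovery and job assignment, portability, host safety, fair credit accounting, and monitoring of remote jobs for progress and correctness.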

Peer-to-peer (p2p) networks (e.g., CAN, Chord, Pastry, and Tapestry) have achieved widespread use as a content discovery mechanism. We propose using the same mechanisms for resource discovery and job assignment to solve the first challenge. Moreover, because p2p networks are self-organizing, nodes can join and leave easily, which obviates the need for a central administrative organization and human intervention and lowers administrative overhead.

The use of Java is extremely convenient, if not essential, for overcoming the second and third of these challenges (portability and safety). We use Java's universal virtual machine execution environment to enable applications to run on a wide variety of physical machines without any change (portability), and we use the Java virtual machine's sandboxing and security features to give resource providers a safe execution environment in which to host untrusted applications (host safety). Both of these attributes significantly lower the cost and risk for producers and consumers of cycles to join a network of shared resources. Moreover, research has shown that there are no inherent technical reasons for not using Java for high performance computing.

To solve the fourth challenge, we have designed a credit accounting system. A community of pooled resources will survive only as long as members are treated with a high (but not necessarily perfect) degree of fairness. Moreover, just as the larger economy can function well with a certain amount of fraud and noise in transactions and accounting, so should economies involved in sharing of computational resources. Thus our goal is not to produce a perfectly fair system, but instead to produce a sufficiently good system to enable wide scale sharing of computational resources. An incremental payment scheme is used in many modern economic activities to bound the amount of risk for both providers and consumers in a transaction to the size of an incremental payment. Our credit system, along with the GridCop system, enables the risk for both submitters and hosts in our cycle-sharing system to be bounded in the same way.
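The risk bound of an incremental payment scheme can be illustrated with a minimal sketch. It is not the credit system described here: the function `settle`, its parameters, and the choice that the submitter pays for each increment before the work is verified are all assumptions made for illustration. The point is only that, once payment stops as soon as progress cannot be verified, the submitter's loss never exceeds one increment.

```python
def settle(total_units, unit_price, units_host_completes):
    """Simulate a job paid for one increment at a time (hypothetical model).

    total_units: number of work increments in the job.
    unit_price: credits paid per increment.
    units_host_completes: increments the host really performs before it
        stops making progress (stands in for a monitoring verdict).

    Returns (credits_paid, units_verified, submitter_loss).
    """
    paid = 0
    verified = 0
    for _ in range(total_units):
        paid += unit_price  # assumption: pay for the next increment up front
        if verified >= units_host_completes:
            break  # no verified progress for this increment: stop paying
        verified += 1
    # Loss is whatever was paid for work that was never verified.
    submitter_loss = paid - verified * unit_price
    return paid, verified, submitter_loss


if __name__ == "__main__":
    # Honest host: every increment is verified, so nothing is lost.
    print(settle(10, 5, 10))
    # Host stops after 3 increments: the loss is a single increment's price.
    print(settle(10, 5, 3))
```

If payment instead followed verification, the same argument would bound the host's exposure to one increment of unpaid work; either way, smaller increments mean a smaller worst-case loss at the cost of more frequent verification and payment.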

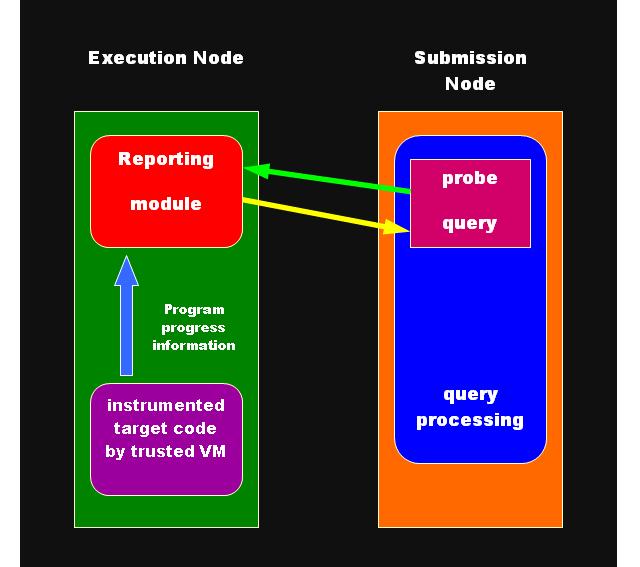

To solve the last challenge, we have developed a remote job progress and correctness monitoring system, GridCop. The GridCop system allows a computation on a remote, and potentially fraudulent, host system to be monitored for progress and execution correctness. Monitoring progress and correctness is especially important to the submitter of a long-running application because it gives submitters confidence that their jobs will be executed by a remote, otherwise untrusted host. Moreover, along with the above credit system, GridCop enables the use of the incremental payment scheme.

GridCop Architecture Overview